The first and most important thing to point out is that it’s very important not to oversimplify things.

The world is entirely invested in its use of ICT. Bringing about a radical change is extremely complicated, even though there are examples of how this has happened in other areas. This is the reason why I’m putting this website together. I reckon I can help make it more accessible, irrespective of the broader complexities.

Furthermore, I have some particular views. Some elements are shared by some people and disagreed with by others; frankly, these are my views and not anyone else’s. If someone wants to go and do something that’s a better design, go for it. My method has sought to ensure that the basic infrastructure does not lead to asserting the right of any commercial entity to demand royalty payments from humans to live (that’s the job of governments, and it’s called ‘tax’).

A summary of my design methodologies (at present) is as follows.

The way this works is by making use of machine-readable data formats for storing information. Data in its most basic form, for traditional computers, ends up being processed in binary. Programming languages were built to change the way software is developed (so that developers are not authoring code in binary). Some of those languages offer the means to produce software where the human-readable information produced is also machine-readable, in a format that enables interconnected computing systems to understand the meaning of what is communicated in the file or document. Other systems exist that help convert and change the way a single object (for instance, a picture) can be interpreted by computers. An image can be processed to identify any persons (and their faces), objects, location, time and an array of other information. Audio can be processed to transcribe it to text and to analyse the type of content, alongside other things.
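To make the idea of information that is both human-readable and machine-readable a little more concrete, here is a minimal sketch in Python. It assumes a JSON-LD style record, where a `@context` maps plain field names to shared vocabulary URIs. The record, the person's name and the `expand` helper are hypothetical illustrations; the helper is a heavily simplified stand-in, not the full JSON-LD expansion algorithm.

```python
import json

# Hypothetical record. The "@context" maps plain field names to shared
# vocabulary URIs (schema.org terms here), so the meaning of each field
# is explicit to any system that reads the file.
document = """
{
  "@context": {
    "name": "https://schema.org/name",
    "birthPlace": "https://schema.org/birthPlace"
  },
  "name": "Alice Example",
  "birthPlace": "Melbourne"
}
"""


def expand(record: dict) -> dict:
    """Replace each short field name with the full URI given in the context.

    Simplified for illustration: fields without a context entry keep
    their original name.
    """
    context = record.get("@context", {})
    return {
        context.get(key, key): value
        for key, value in record.items()
        if key != "@context"
    }


expanded = expand(json.loads(document))
for term, value in expanded.items():
    print(term, "->", value)
```

A person can still read the original record, while a program that has never seen it before can resolve each field to a shared definition.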

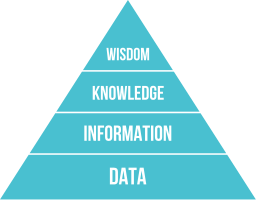

Cumulatively, the underlying processing capabilities all flow back to the means through which data, as information, is processed so that it becomes meaningful. This in turn brings about the next challenge: how to uplift information to a point where it can reasonably be considered ‘knowledge’. The overall idea is that the person managing their own inforg is the primary agent using semantics to define what wisdom means to them, and for them, as recorded over time by computing systems that network and communicate information relating to them, one way or another.

Given that ICT is predominantly operated in an ‘institutionally centric’ mode today, the term ‘identity’ is most commonly used in relation to systems that relate to people, whereby the intended interpretation of the term, for consumers, is that a person is assigned a binding service by a legal personality of some sort. Whilst these services are indeed essential, the implication is, moreover, one of confusion.

In my ‘knowledge banking industry’ methodologies, the modelling used is distinct. Whilst legal personalities are amongst the ‘things’ supported by a ‘human centric’ architecture framework, the framework itself considers the distinct needs of humans by way of the term ‘inforg’, and the meaningful use of AI through services provided by knowledge banking providers (which are perhaps something similar to the ‘information fiduciary’ concepts discussed elsewhere).

The aim is to provide the means for people to have control over how they generate their own semantics. This is made possible by enabling them to beneficially own and operate the data relating to them, including how links are rendered and processed with a plurality of agents in any query (over time), on a dynamic basis. The needs of the consequential, decentralised ‘information management’ ecosystems bring about an array of functional requirements. These can be addressed by incorporating a few distinct constituents, designed to provide interoperable, synergistic, permissive and authoritatively enhanced data-flows.

The key constituent elements identified as required to bring this about are:

- Commons (ie: open data)

- Things (and the data generated by them)

- ‘Identity Instruments’ (described by IBM, and otherwise known as ‘verifiable claims’)

- Content & relationships produced by self and others.

- AI Systems (and there’s a lot of this stuff that’s too complicated to get into in this post).

Commons, or ‘open data’, is about things like languages and any means to describe anything of the natural world. It is the knowledge of humanity and of our natural world. It is our constructs of law and the other critical infrastructure required for any information management system to function.

Emerging technology is developing the underlying considerations about what could and should be ‘commons’. One example is the advancement of computer vision technology, which has an array of applications: biomedical research and services; facial recognition; the new types of interfaces required for head-mounted augmented reality displays; and similar functional capabilities built into vehicles, CCTV management systems and other media platform applications. The right to ‘make arrangements’ surrounding the use of a person’s own biometric signatures should reasonably (subject to law) be the sole right of that person (the ‘data subject’). These sorts of attributable rules are dissimilar to the considerations that might be made about the means through which persons are able to identify flora and fauna, or microscopic diseases, or other areas where ‘commons’ attributions may be beneficially applied.

As a related consideration, the way these systems work is based upon the use of URIs. There are two main reasons why this is important. The first is that a single RDF document may contain a multitude of URI resources from public and private sources, made accessible to an authorised agent over a multitude of protocols. An example of how this is achieved can be seen by reviewing the DID info. The second is about decentralising discovery and maintaining privacy by making use of decentralised ledger technologies. The ramification of doing so is that a private (“secret”) query can be achieved without having to process the communications event across the infosphere. Instead, it leverages the ‘knowledge banking infrastructure provider’ to support the means of an individual to maintain their interests, as is the purpose of the knowledge bank itself.
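As a rough illustration of the first reason, the sketch below groups the URIs found in one document by their scheme, which is the part of a URI that indicates how (or over what protocol) the resource is reached. All of the URIs are hypothetical examples, and `group_by_scheme` is an illustrative helper of my own rather than part of any RDF or DID tooling.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical URIs, as might appear together in a single RDF document:
# a public vocabulary term, a personal profile, a DID and a mail address.
uris = [
    "https://schema.org/Person",
    "https://example.org/alice/profile#me",
    "did:example:123456789abcdefghi",
    "mailto:alice@example.org",
]


def group_by_scheme(uri_list):
    """Group URIs by scheme (the text before the first colon)."""
    by_scheme = defaultdict(list)
    for uri in uri_list:
        by_scheme[urlparse(uri).scheme].append(uri)
    return dict(by_scheme)


grouped = group_by_scheme(uris)
for scheme, members in grouped.items():
    print(scheme, len(members))
```

An authorised agent would then need a resolver for each scheme it encounters; how a `did:` identifier is actually resolved depends on the DID method in use, which this sketch does not attempt to cover.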

The specific means through which this might be achieved (ie: a few examples) are discussed elsewhere on the site (or will be soon).

Things are literally things:

- Things we connect to the internet, and things that interact with the internet.

- Things we call companies (legal personalities).

There are all sorts of things. Some forms of things can be integrated using the RDF-based Web of Things tooling (noting the DIDs mentioned above). Other forms of things, like a person’s involvement with a company, can be better supported through the use of ledger technology, which in turn also makes use of RDF to associate the activities of persons in their role with the legal personality, or with a project (ie: the production of a product) otherwise administered in a new and innovative way using technology.

Identity Instruments are electronic claims that are issued socially, most often between legal personalities, and are intended to be applied to natural persons or other legal personalities. Examples include the various embodiments of ‘verifiable claims’ that have been provided ‘trusted support’ as a representation of ‘fact’, such as a birth, university or telecommunications record. Others can include the ownership details of a thing, such as a motor vehicle, and related ‘verifiable claims’ about the registration and insurance of that asset. Yet another form may be the means to identify that a person is the legal tenant or occupier of a particular property, and is therefore entitled to define how and what ‘virtual items’ may be placed in the vicinity of their property. A local council or government body may do the same, to ensure that no virtual entertainment objects encourage engagement via mobile phones on freeways or in other dangerous places.
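The core mechanic of such a claim (an issuer signs a statement about a subject, and anyone relying on it checks the signature) can be sketched as follows. Note the assumptions: real verifiable claims typically use public-key signatures, so that a verifier does not need the issuer's secret. This sketch swaps in an HMAC with a shared key purely because it is available in the Python standard library. The registry URL, key and claim fields are all hypothetical.

```python
import hashlib
import hmac
import json


def sign_claim(claim: dict, issuer_key: bytes) -> str:
    """Sign a claim. HMAC is a stand-in here for a public-key signature."""
    # Sorting the keys gives a stable byte representation to sign.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    return hmac.new(issuer_key, payload, hashlib.sha256).hexdigest()


def verify_claim(claim: dict, signature: str, issuer_key: bytes) -> bool:
    """Check that the claim has not been altered since it was signed."""
    expected = sign_claim(claim, issuer_key)
    return hmac.compare_digest(expected, signature)


# Hypothetical issuer key and claim about a vehicle registration.
issuer_key = b"hypothetical-registry-secret"
claim = {
    "issuer": "https://example.org/vehicle-registry",
    "subject": "did:example:123456789abcdefghi",
    "type": "VehicleRegistration",
    "registered": True,
}

signature = sign_claim(claim, issuer_key)
print(verify_claim(claim, signature, issuer_key))

# Changing any field after signing makes verification fail.
tampered = dict(claim, registered=False)
print(verify_claim(tampered, signature, issuer_key))
```

The point of the sketch is only that the claim travels with proof of who issued it, so the holder can present it to third parties without the issuer being contacted each time.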

The collection of all these things in a socially connected web, alongside all the stuff people create by themselves, and the derivatives of the things created in relation to the other stuff, all stored together in a format that supports version control, is called an Inforg. The entire embodiment of information stored in a person’s Inforg, together with the materials connected to it, forms the means through which AI systems are then employed, and programmed, to form the informational representation of an organic entity (the human being whose ‘inforg’ it is) to whoever is engaging with it, on a dynamic basis. It is designed principally to be curated by its ‘data subject’.

The purpose of a ‘knowledge banking industry’ is to supply the apparatus required for humans to build and manage their inforg; to use the information that can be stored in it (as a consequence of any such thing existing); to thereafter build their own rules about how it can be used by others; and, as a consequence, to define their own AI infrastructure, which works socially with the AI infrastructure of others, as their own design and definition of what it needs to do for them. These designs are not about supporting the means for people to break the law. Whilst the design intends to support the needs of law, as defined by the people (through means enhanced by having produced such apparatus), the broader consideration is that it limits the otherwise significant use and manipulation of data, in its variety of presented forms, that is otherwise exploited inexorably by agents world-wide.

This ‘knowledge banking’ technical ecosystem in turn provides improved means to make use of AI for the betterment of humanity and the natural world. The means to apply data to enhance research in areas such as healthcare and social policy would be greatly enhanced should an industry of providers be established to anonymise the results of research requests and deliver a multitude of aggregate results to relevant agencies. Those results would be collectively processed from the comprehensive availability of ‘point data’ sourced from those willing to participate. This in turn forms a far more comprehensive means to contribute towards the availability of ‘commons’, generated by way of the ‘knowledge banking infrastructure’ in a manner that preserves privacy. Yet the design paradigm takes into account far more than computer science considerations alone. The broader field through which such concepts are thought about is generally called ‘web science’, and it takes into account an array of considerations, most importantly those that relate to our systems of law and the role of language in any undertaking of ‘ontological design’.

The way the ‘Privacy’ and ‘Dignity’ (ie: see UDHR) issues that otherwise plague our societies can be solved by a knowledge banking industry is that the institutional nature of the proposed ‘regulated’ industry, not operated by government, in turn provides the means to preserve separation of powers. Because the legal rules that apply to data are decoupled from the legal jurisdiction that applies to the use of applications (as it was in the days before the internet), the system of government that applies to the individual whom the inforg is about also applies to the use of their data; and any differences between jurisdictions (ie: USA law vs. AU law, in relation to a concept such as ‘copyright’) can be resolved through the use of semantics. The problem today is that our ‘information management systems’, as currently operated, provide a foundation that is not ‘fit for purpose’. This can change, if doing so is deemed sufficiently meritorious. The challenge is therefore how to economically communicate the benefits of doing so, which is yet another complex constituent of the broader ecosystems challenge. Technology has changed so rapidly that many of our elders still struggle. Yet without appropriate leadership, the future for those they care for (and those who will need to care for them) may not be so good.

The reason why the problem is so hard to solve is that the idea of changing (or offering an alternative to) the way ‘information management’ works socioeconomically is so fundamental that it impacts everything. It is not simply a ‘widget’ or an ‘app’; it is a change as significant as the introduction of the internet, or of the banking industry itself, which in the 1600s decided that humans needed a means to be independently provided with the beneficial and protected use of a bank account.

In 2014, Sir Tim Berners-Lee discussed related works and considerations in his talk about forming a ‘magna carta’ for the web.

His views can in turn be considered in relation to how the term ‘personhood’ has advanced as an area of social policy development within living memory.

Making matters more complicated, the way the internet is predominantly used today, given the differences between the approaches taken by sovereign jurisdictions, does not easily preserve the broadly accepted principles of ‘rule of law’, or those asserted by the Charter of the Commonwealth.

Other methodologies considered in relation to how these sorts of problems may be solved include the idea of personal servers, as is notably pursued for good purpose by the FreedomBox Foundation. Whilst this work is indeed very important (and interoperable), the issues it cannot solve include how address-space governance is managed in relation to IANA, and how to ensure that domestic law-enforcement requirements are supported whilst privacy is preserved, alongside the need to define a methodology that supports ‘Separation of Powers’.

The technical design paradigm employed thereby responds to these complex issues by producing a methodology, not dissimilar to ISPs, where a provider would have tens of thousands, if not hundreds of thousands or millions, of members, whose analytical fingerprints can be eradicated when generating statistical reports involving so many data subjects over a variety of data points.
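One simple way to picture how individual fingerprints can disappear in a large membership is a report that only publishes counts for groups above a minimum size. The sketch below is an illustration under my own assumptions: the threshold of 5, the `region` field and the sample members are all hypothetical, and small-count suppression is only one of several techniques a provider might use. It is not, on its own, a guarantee of anonymity.

```python
from collections import Counter

# Assumed threshold: groups smaller than this are left out of the report,
# because a very small group could point back to identifiable individuals.
MIN_GROUP_SIZE = 5


def aggregate_report(records, field, min_group_size=MIN_GROUP_SIZE):
    """Count members per value of `field`, suppressing small groups."""
    counts = Counter(record[field] for record in records)
    return {
        value: count
        for value, count in counts.items()
        if count >= min_group_size
    }


# Hypothetical membership data held by one provider.
members = (
    [{"region": "north"}] * 12
    + [{"region": "south"}] * 7
    + [{"region": "remote"}] * 2
)

report = aggregate_report(members, "region")
print(report)
```

The larger the provider's membership, the fewer groups fall under the threshold, which is one reason the scale of an ISP-like provider matters to this design.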

These systems are intended to be operated legally. The default circumstance is that market-based providers can be elected by citizens, and those citizens can change providers if they want to (which, in the ISP industry, is termed ‘churning’); that the industry is regulated; that it is the safest provider type with which to store information; and that the providers’ role is to act to protect the dignity of others. Using URI-based information standards, it doesn’t matter where data is stored: applications network permissively.

Moreover, the benefit of building an institutional ‘knowledge banking industry’ is that it provides the date-stamps and related electronic forensic tooling that persons require to ensure they can ‘walk into a court room’ and have their data examined simply. Seeking meaningful utility of ‘rule of law’ is immeasurably assisted as a result.

An old diagram (perhaps incorporating a few errors) is shown below; I hope it’s somewhat illustrative…